Google Image Search in the World of Generative AI Experiences

The importance of including great imagery to support your content

Incorporating engaging and informative images in your content marketing can enhance brand recall, reinforce your message, and highlight key points in your articles. Search engine result pages (SERPs) are becoming increasingly visual, which means you cannot afford to neglect imagery.

Imagery can transform how your products are discovered and recommended; it assists in tailoring shopping experiences to specific audience preferences. Google image guidelines help you create the best owned media to support your brand and, ultimately, revenue.

Table of Contents

- Google Image Search in the World of Generative AI Experiences

- Showcase Your Content with the Best Images

- Google Image Search Includes “About this Image”

- Why is Optimizing for Google Image Search Important?

- Resolving Image Downscaling and Object Fit Issues

- Image Structured Data Drives Targeted Web Traffic

- Google Vertex AI, Circle to Search, and Google Lens

- How Does Gemini Convert Image Text to JSON?

- Google Patents to Improve Relevance, Quality, and Diversity of Image Search Results

- More Ways to Improve Web Images

- How Images on Your Website Can Lead to CRO

- SUMMARY: Images in AI-powered Multisearch Experiences

Gen Z prefers using image search and image recognition tools. These tools can be more visually engaging than typing a query and getting a list of blue links. Many searchers are immersed in visual mediums.

91% of consumers prefer visuals more than plain text

According to Venngage, “91% of consumers prefer visuals more than plain text.” The December 13, 2023 article 16 Visual Content Marketing Statistics to Know for 2024 by Krystle Wong sees this as why 56.2% of digital marketers rate visual content as a big deal in their marketing plan.

Wong also reports that an additional 23.8% feel their digital strategy is weak without visual content. By crafting unique, smart, eye-catching visuals for your articles, you help provide an emotional connection with people. I’m often surprised at how many of our client images win a backlink. Great owned media can improve your content’s shareability factor.

The essential task is to ensure that your images or videos are relevant to the content and are sized correctly for JSON image search and featured snippets.

Showcase Your Content with the Best Images

Google encourages this. In fact, its new Search Generative Experience displays more images than traditional search.

“Find_matching_image, which is used in multi-image questions to find the image that best matches a given input phrase, is implemented by using BLIP text and image encoders to compute a text embedding for the phrase and an image embedding for each image. Then the dot products of the text embedding with each image embedding represent the relevance of each image to the phrase, and we pick the image that maximizes this relevance.” – Google Research: Modular visual question answering via code generation
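The matching step described in that quote can be sketched in a few lines. This is a minimal illustration only: the short hand-written vectors stand in for the text and image embeddings that BLIP encoders would produce, and the function name and sample values are assumptions made for the example, not Google's code.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def find_matching_image(text_embedding, image_embeddings):
    """Return the index of the image whose embedding has the largest
    dot product with the text embedding, plus all relevance scores."""
    scores = [dot(text_embedding, emb) for emb in image_embeddings]
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return best_index, scores


# Hypothetical toy embeddings (real encoders output hundreds of dimensions).
phrase_embedding = [0.9, 0.1, 0.0]
image_embeddings = [
    [0.1, 0.8, 0.1],  # image 0
    [0.8, 0.2, 0.0],  # image 1
    [0.0, 0.0, 1.0],  # image 2
]

best_index, scores = find_matching_image(phrase_embedding, image_embeddings)
print(best_index, scores)
```

With these sample values, image 1 scores highest because its vector points in nearly the same direction as the phrase vector.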

Google Image Search is important to your success since these images also display:

- Site Name.

- Site Favicon.

- Page Title.

Images assist searchers before they click through to a website. Imagery provides a sense of how well the page may relate to the query intent.

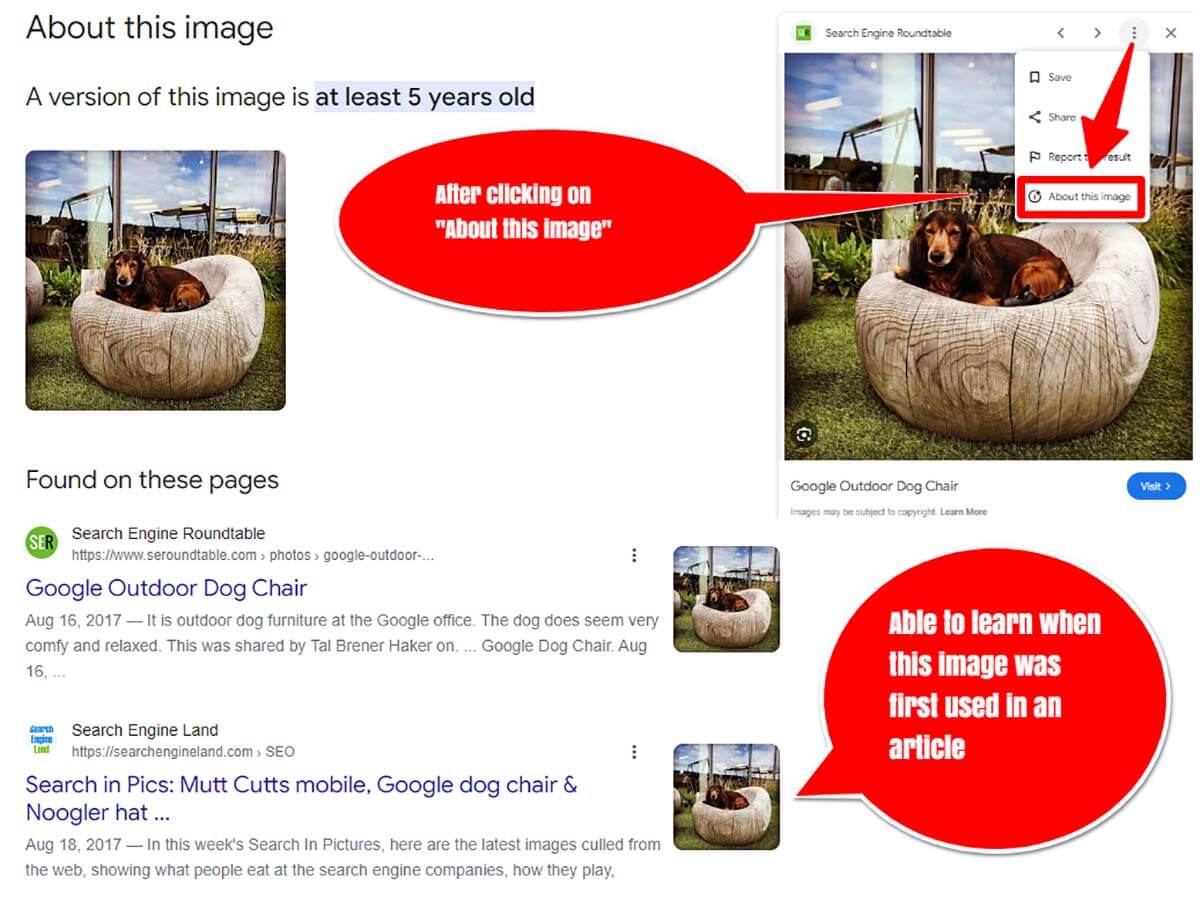

Google Image Search Includes “About this Image”

Searchers are getting savvier. These new image search capabilities support critical thinking. I like a level of healthy skepticism when it comes to online imagery – especially when searching for healthcare answers.

The “About this image” feature is not currently a foolproof verification system. You may still want to cross-reference an image you find online against multiple trusted sources.

How to view “About this Image” data?

- Conduct an image search using the Google Images filter.

- Click on the three dots to the right of the image.

- Choose the “About this image” option.

This SERP feature provides you the means to spot fake, misleading, or manipulated images. Conducting marketing research lets you learn how a specific image is being used. Your competitive research can include studying “about this image” results to surface new ways to use images.

How is Google’s “About this image” feature useful?

- One reason is that it lets shoppers verify eye-catching product images. They can learn if it is from the brand they want to buy from, when the image was initially published, and if it is AI-modified. More capabilities are available for this use case, such as AI labels, whether through self-disclosure or Meta detecting AI content.

- It gives you additional context about the image. It helps you determine if it is being used out of context.

- Another reason is that it provides more information for SEOs to glean from researching images on the SERP.

- This assists in determining the credibility of the source. You can ask yourself if you believe the image is being interpreted as intended. Or does it represent misinformation?

- It is useful for fact-checking research. Did it come from the location the source stated? Did it distort the narrative in its favor? Is it altered in a way that misrepresents information?

It is worth noting that in preparation for the 2024 US presidential election, OpenAI launched a tool that can detect (unaltered) DALL-E 3 images with 98% accuracy, without watermarking. This is to counter the surge in fake images and media alterations.

“The ChatGPT creator also plans to add tamper-resistant watermarking to mark digital content such as photos or audio with a signal that should be hard to remove.” – OpenAI’s new tool can detect images created by DALL-E 3 [2]

More recent versions of ChatGPT can interpret images. You can upload a screenshot or picture of data or text, and it can provide you with a description of the picture. You can screenshot a tweet and ask what the tone of the message is.

How many images should be in an article?

There is no fixed number of images that every article should include. A good starting point is to add one photo after every 250 to 450 words. Since images make content more approachable and engaging for readers, A/B tests show it is often best to add more. For example, readers will expect more images if the article is about creating a beautiful landscape.
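As a rough planning aid, the 250 to 450 word spacing can be turned into an image count for a given article length. The function name below is hypothetical, and the result is only a starting range under that rule of thumb, not a recommendation from Google.

```python
def suggested_image_range(word_count, min_gap=250, max_gap=450):
    """Estimate how many images fit if one is placed after every
    max_gap words (fewest images) or every min_gap words (most images)."""
    fewest = word_count // max_gap
    most = word_count // min_gap
    return fewest, most


# Example: an 1,800-word article.
print(suggested_image_range(1800))
```

For an 1,800-word article this gives a range of 4 to 7 images, which you can then adjust for the topic and for page load speed.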

A page with only one featured image can look barren and be hard to read. High-quality photos break up text and appeal to users. They increase the ease of marketing the page. However, too many will bog down page load speed, which may trigger higher bounce rates.

A page with multiple, well-optimized images can load faster than one with a single, large image file. Finding a middle ground for page images depends on many factors. Rather than being thin in imagery, consider various techniques that trim image load times. You can also host your images on a Content Delivery Network (CDN).

A CDN caches images at edge servers. They sit between the web server and the user. An edge server geographically closer to the searcher will deliver data faster. It also reduces the image file access burden on the main server.

Why is Optimizing for Google Image Search Important?

By leveraging high-converting images, you have the potential to significantly boost your website traffic and reach a more targeted audience.

Many people search by image

TrueList reported on February 17, 2024, that “Around 1 billion people use Google Images daily.” Author Branka reports in Google Search Statistics – 2024 that 10.1% of Google traffic comes from images.google.com. People use Google Image Search and click on it approximately 1 billion times daily. Think of that staggering volume of searches: nearly 11.5k every second. Google Search has already indexed around 10 billion images to help enrich its SERPs. [3]
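The per-second figure follows from simple division. A quick check, assuming the roughly 1 billion daily searches are spread evenly across the day:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

daily_image_searches = 1_000_000_000  # approximate figure quoted from TrueList
searches_per_second = daily_image_searches / SECONDS_PER_DAY

print(round(searches_per_second))
```

The result is about 11,574 searches per second, which matches the "nearly 11.5k" figure.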

“To help you uncover the information you’re looking for — no matter how tricky — we created an entirely new way to search with text and images simultaneously through Multisearch. Now, you can snap a photo of your dining set and add the “coffee table” query to find a matching table. First launched in the U.S., Multisearch is now available globally on mobile, in all languages and countries where Lens is available.” – Go beyond the search box: Introducing multisearch [4]

Understand semantic similarity: The advancement of matching queries into similar forms signals that search engines’ semantic understanding of content is crucial. SEO should consider the intent and contextual relevance of articles, and enrich content with semantic triples. This helps feed your Knowledge Panel.

How you use images can influence your Google Search Console data. When an image from your site appears in a knowledge panel, it may trigger a significant increase in impressions. That increase may not be accompanied by a matching rise in clicks.

Resolving Image Downscaling and Object Fit Issues

Often, someone uploads an image and uses it in a publication without understanding size fit.

When seeking to fix image down-scaling and “object-fit” issues, the primary concern is ensuring an image is resized to fit within its container while maintaining its aspect ratio, without distortion. This can be accomplished by using the appropriate “object-fit” value in CSS. You can select from “contain”, “cover”, or “scale-down” depending on your starting point and desired outcome.

Things to know about image down-scaling and object-fit:

- Object-fit property: This CSS property instructs browsers about how an image is resized to fit within its container.

- Common object-fit values:

  - “contain”: The image is scaled down to fit entirely within the container while preserving its aspect ratio, potentially leaving empty space around it.

  - “cover”: The image fills the entire container while maintaining its aspect ratio, but may crop parts of the image that don’t fit.

  - “scale-down”: The image is scaled down to the smaller size between its original size and the size that would fit using “contain”.
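To see how the three values differ, the sizing rules can be modeled numerically. This is a simplified sketch of the behavior described in the list, not browser code: the function name is hypothetical, and it only computes the rendered image size, ignoring positioning and cropping offsets.

```python
def rendered_size(img_w, img_h, box_w, box_h, fit):
    """Return the (width, height) an image is drawn at inside a container
    for a given object-fit value, always preserving the aspect ratio."""
    contain_scale = min(box_w / img_w, box_h / img_h)  # fits entirely inside
    cover_scale = max(box_w / img_w, box_h / img_h)    # fills the whole box
    if fit == "contain":
        scale = contain_scale
    elif fit == "cover":
        scale = cover_scale
    elif fit == "scale-down":
        # Never enlarge: use the smaller of original size and "contain".
        scale = min(1.0, contain_scale)
    else:
        raise ValueError(f"unsupported object-fit value: {fit}")
    return round(img_w * scale), round(img_h * scale)


# A 1200x800 photo placed in a 300x300 container.
for value in ("contain", "cover", "scale-down"):
    print(value, rendered_size(1200, 800, 300, 300, value))
```

In this example, “contain” and “scale-down” both draw the photo at 300x200 with empty space above and below, while “cover” draws it at 450x300 and the container crops the overflow. A small 100x50 icon in the same container would be enlarged to 300x150 by “contain” but left at 100x50 by “scale-down”.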

Image Structured Data Drives Targeted Web Traffic

With image SEO schema data, Google can display your images in specified rich results. This may include a prominent badge in Google Images, which gives searchers relevant information about your page.

The tech giant currently offers badges for recipes, videos, products, and animated images (GIFs).

Using images in your content helps users identify the type of content associated with the image. They explain your concepts visually. By implementing appropriate structured data on your pages, individuals find relevant content quickly, as do search engines. In this way, you can drive better-targeted traffic to your website.

While certain rich results feature a prominent image, a separate image schema just for images doesn’t exist. This is because certain SERP displays are better suited to visual results. For example, a Google Product Carousel is one rich result type that displays a sequential list or scrollable gallery. This is when images span most of the result.

Google’s 3D Image markup

On March 25, 2024, Google added this image markup feature. It explained that: “Sometimes 3D models appear on pages with multiple products and are not clearly connected with any of them. This markup lets site owners link a 3D model to a specific product.” It also now supports 3D models in Merchant Center product structured data.

Providing multiple images at different resolutions within your structured data can improve how your content looks in various search results. Consider that ImageObject is important to image schema. This goes along with providing context in your alt text and image file URL to support better results in image search. Avoid using both IPTC and structured data so that you don’t end up with conflicting field values.
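One way to picture this is a small script that builds JSON-LD with several ImageObject entries for the same article. Treat it as a sketch under assumptions: the function name, headline, and example.com URLs are placeholders, and the exact properties you need depend on the rich result type you are targeting.

```python
import json


def article_image_markup(headline, image_urls):
    """Build an Article JSON-LD dict that lists each image
    (for example, different crops or resolutions) as an ImageObject."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "image": [{"@type": "ImageObject", "url": url} for url in image_urls],
    }


def to_json_ld(markup):
    """Serialize the markup for a <script type="application/ld+json"> tag."""
    return json.dumps(markup, indent=2)


# Hypothetical image URLs at three aspect ratios.
markup = article_image_markup(
    "Google Image Search in the World of Generative AI Experiences",
    [
        "https://example.com/images/hero-1x1.jpg",
        "https://example.com/images/hero-4x3.jpg",
        "https://example.com/images/hero-16x9.jpg",
    ],
)
print(to_json_ld(markup))
```

Generating the markup from one list of URLs keeps the structured data in step with the images actually published on the page.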

Comparing Google, Yahoo, and Bing Image Search

For the search query “alleviate the burden of TMD on individuals and healthcare systems,” Yahoo produced an exceptional image-rich SERP. (Yahoo searches are powered by Bing. However, this doesn’t imply that their search results are exactly the same. In this case, Bing surfaced results without images.) For the same query, I was unable to generate any images in Google SERPs, whether on desktop or mobile or in traditional search results or logged in to Search Generative Experience.

Google Search Generative Experience can also generate images. It functions similarly to other AI-generation tools but only within the new experimental search interface.

Google Vertex AI, Circle to Search, and Google Lens

How are Google Vertex AI, Circle to Search, and Google Lens different from each other?

- Google Vertex AI: Vertex AI is a fully-managed, unified AI development platform for building and deploying generative AI where you can generate answers about uploaded images to build next-gen AI applications. You can customize by leveraging multiple tuning options for Google’s text, image, or code models. [5]

- Circle to Search: This Google feature is available on later models of Android phones. People can circle, highlight, scribble, or tap text or images anywhere on their screen to select and search for more about the text, image, or video on Google. [6]

- Google Lens: Google Lens captures an image and then compares the picture with similar images to determine the most accurate result. AI-powered capability in Google Lens seeks to improve visual search results by delivering the results in a different format.

Google’s gesture-powered “Circle to Search” was announced on January 17, 2024, at the Samsung Galaxy S24 series launch and began rolling out on January 31, 2024. Google Lens multisearch was first introduced in April 2022 to a US-only beta group. While it also offers generative AI insights, it’s not the same product as Google’s experimental GenAI search SGE, which remains opt-in only.

Google also states that it is adding CodeGemma to Vertex AI, a newer model from its Gemma family of lightweight models. With each new update, these tools are getting better at delivering richer and more compelling visuals. AI Studio is an adaptable toolbox within which Gemini 1.5 Pro is one powerful tool.

I highly recommend trying AI Overviews for multisearch in Lens. Also, being a JSON-LD coder, I find it fun to experiment with how Gemini converts image text to this structured data format.

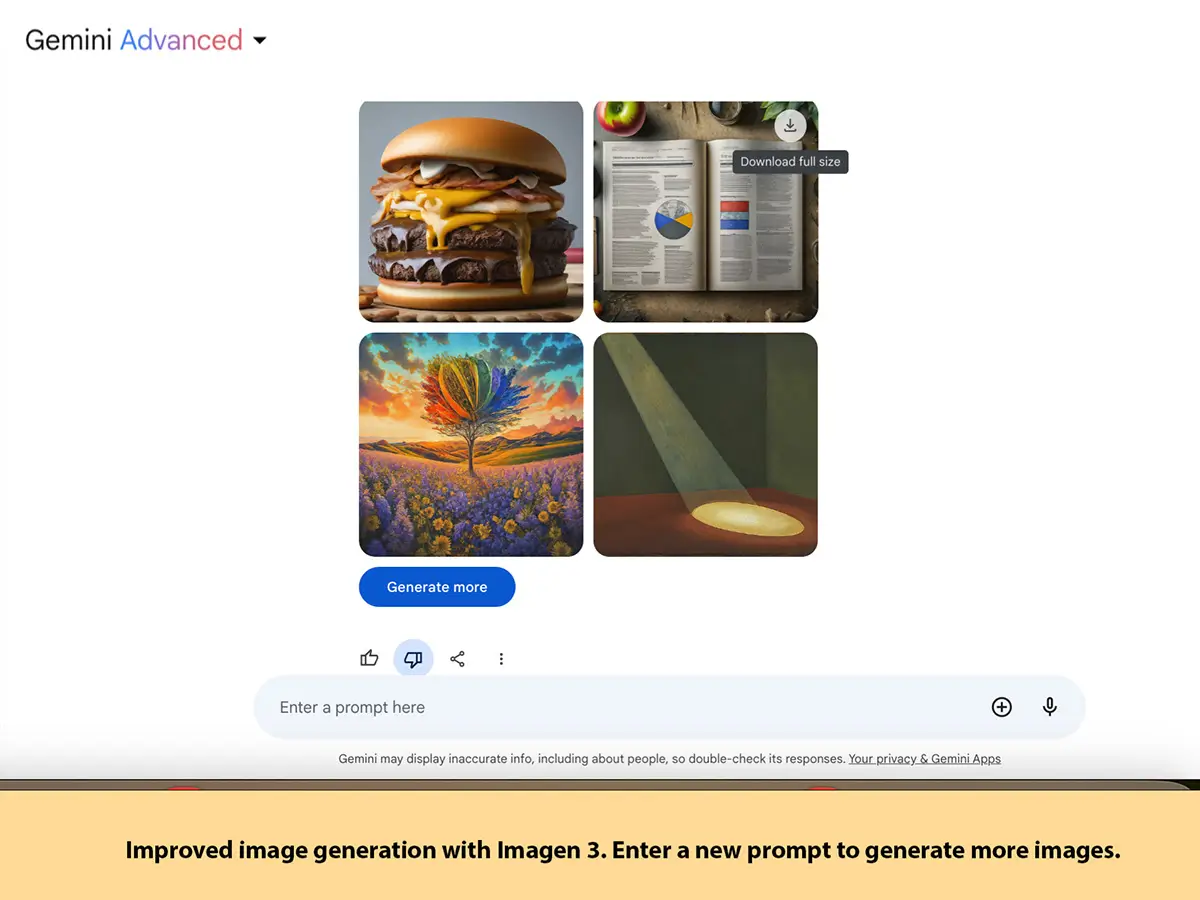

Recommended next-generation AI products for AI image generation:

AI image tools, according to my favorites and limited experience:

- DALL-E 3.

- Gemini Advanced – Imagen 3.

- Adobe Firefly.

- Midjourney.

- Stable Diffusion.

- JumpStory.

- Google Vertex AI.

I also like Claude 3 Opus.

This is what the Google SERP returned for my query “What is the best AI image generator?” In the HTML code, the class is “webanswers-table.”

| AI image generator | Price | Output speed |
|---|---|---|
| Gemini Advanced – Imagen 3 | $20 per month | Fast |
| Image Creator from Microsoft Designer (formerly Bing Image Creator) | Free | Fast |
| DALL-E 3 by OpenAI | $20 per month | Fast |
| ImageFX by Google | Free | Fast |
| DreamStudio by Stability AI | Free + Credits | Fast |

If I click on “5 more rows | Apr 9, 2024”, it takes me to The best AI image generators to try right now by ZDNET.

Actually, it surprised me that it didn’t first reference Google Imagen. It placed a table on the SERP straight from the ZDNET article. AI Studio and Gemini 1.5 Pro are both Google-owned tools that leverage Google’s advanced language models. They differ in purpose, in a few key features, and in their outputs.

Google Imagen creates ‘live photos’

As of April 10, 2024, users can access this Google image-creation tool to generate text-to-live photos, the company said in a blog post. This includes creating four-second videos at a resolution of 360 pixels by 360 pixels and 24 frames per second. All you have to do is input text. It works if your text prompt gives it enough detail to draw up results.

“It’s adept at themes such as nature, food imagery, and animals. It can generate a range of camera angles and motions while supporting consistency over the entire sequence.” – Google Cloud announces updates to Gemini, Imagen, Gemma and MLOps on Vertex AI

Google accepts AVIF image format

The Google Search Central Blog announced in August 2024 that Google Search now supports the AVIF image format. AVIF images weigh significantly less while remaining crisp, clear, and high resolution. In one example, the same image compressed from a 111 KB JPG to a 35.4 KB AVIF.

AVIF is an open-source, royalty-free image compression standard that offers better quality and compression than traditional formats like JPEG and PNG. Google’s support for AVIF could have a significant impact on SEO, as it can improve Core Web Vitals scores and lower image sizes.
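To put the file sizes quoted above in perspective, the saving can be worked out directly. This is plain arithmetic on the two numbers from the example; actual savings vary by image and encoder settings.

```python
jpg_kb = 111.0   # original JPG size from the example
avif_kb = 35.4   # same image as AVIF

saved_kb = jpg_kb - avif_kb
savings_percent = saved_kb / jpg_kb * 100

print(f"Saved {saved_kb:.1f} KB ({savings_percent:.1f}% smaller)")
```

That is a reduction of about 68%, so the AVIF version is roughly a third of the JPG's weight.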

How Does Gemini Convert Image Text to JSON?

Google Gemini can interpret visual graphs and quickly convert them into appropriate JSON tag structures. Its AI capabilities can input a photo of a plate of scones and generate a written recipe as a response. It also works if you input a recipe and request a corresponding image. [7]

Gemini can understand any input type, combine different types of information, and generate almost any output. You can prompt and test in Vertex AI with Gemini by text, image, video, or code. Use Gemini’s advanced state-of-the-art generation capabilities for extracting text from images, converting image text to JSON, and generating answers about uploaded images to build next-gen AI applications.

You can also extract structured data from an image using Claude 3 Opus. Simply upload an image of a document form and it generates a web version, using an LLM implementation that is handled by the tool’s schema.

I’ve long been interested in how Google image search works and how significant it will be in the future. Patents lend us clues.

Google Patents to Improve Relevance, Quality, and Diversity of Image Search Results

We learn here how Google may categorize search queries based on the analysis of image search results. By receiving images from image search results, along with user behavior data, it may annotate these images based on this content analysis. This refines how it can provide better tailored search that is aligned to user intent.

On December 21, 2023, a new Google patent application was published as US20230409653A1: Embedding Based Retrieval for Image Search. This patent describes a method for finding relevant image search results based on the similarity of their embeddings to the query’s embedding, enabling more effective and efficient image search.

“Methods, systems, and apparatus including computer programs encoded on a computer storage medium, for retrieving image search results using embedding neural network models. In one aspect, an image search query is received. A respective pair numeric embedding for each of a plurality of image-landing page pairs is determined. Each pair numeric embedding is a numeric representation in an embedding space. An image search query embedding neural network processes features of the image search query and generates a query numeric embedding. The query numeric embedding is a numeric representation of the image search query in the same embedding space. A subset of the image-landing page pairs having pair numeric embeddings that are closest to the query numeric embedding of the image search query in the embedding space are identified as first candidate image search results.” – Embedding Based Retrieval for Image Search
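The retrieval idea in the abstract, picking the image-landing page pairs whose embeddings sit closest to the query embedding, can be sketched as a nearest-neighbor lookup. Everything concrete here is an assumption for illustration: the two-dimensional vectors, the pair identifiers, and the use of Euclidean distance. The patent's neural network models are not reproduced.

```python
import math


def closest_pairs(query_embedding, pair_embeddings, k):
    """Return the ids of the k image-landing page pairs whose embeddings
    are nearest to the query embedding (Euclidean distance)."""
    ranked = sorted(
        pair_embeddings.items(),
        key=lambda item: math.dist(query_embedding, item[1]),
    )
    return [pair_id for pair_id, _ in ranked[:k]]


# Hypothetical embeddings that share one embedding space with the query.
query = [1.0, 0.0]
pairs = {
    "image-a|page-a": [0.9, 0.1],
    "image-b|page-b": [0.0, 1.0],
    "image-c|page-c": [0.7, 0.2],
}

print(closest_pairs(query, pairs, k=2))
```

The practical takeaway is that the image and its landing page are embedded together, so the page context around an image affects how close it lands to a query.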

“A diverse/homogeneous query classifier 133 b takes as input a query and outputs a probability that the query is for an image that is diverse. In some implementations, the classifier 133 b uses a clustering algorithm to cluster image results 130 according to their fingerprints based on a measure of distance from each other. Each image is associated with a cluster identifier. The image cluster identifier is used to determine the number of clusters, the size of the clusters and the similarity between clusters formed by images in the result set.” – Query categorization based on image results, July 2022
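A toy version of that clustering step helps show what "diverse" means in practice. This sketch assumes fingerprints are short numeric vectors and uses a simple greedy threshold clustering, with the share of distinct clusters as a stand-in diversity signal. The patent describes a classifier that outputs a probability; none of the names or thresholds below come from it.

```python
import math


def cluster_fingerprints(fingerprints, max_distance):
    """Greedily assign each fingerprint to the first cluster whose seed
    is within max_distance; otherwise start a new cluster.
    Returns one cluster identifier per image."""
    seeds = []
    cluster_ids = []
    for fingerprint in fingerprints:
        for cluster_id, seed in enumerate(seeds):
            if math.dist(fingerprint, seed) <= max_distance:
                cluster_ids.append(cluster_id)
                break
        else:
            seeds.append(fingerprint)
            cluster_ids.append(len(seeds) - 1)
    return cluster_ids


def diversity_signal(cluster_ids):
    """Fraction of results that belong to distinct clusters (0 to 1)."""
    return len(set(cluster_ids)) / len(cluster_ids)


# Hypothetical fingerprints for five image results.
results = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [10.0, 0.0]]
ids = cluster_fingerprints(results, max_distance=1.0)
print(ids, diversity_signal(ids))
```

Here five results fall into three clusters. A query whose results all collapse into one cluster would look homogeneous, while one that spreads across many clusters would look diverse.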

On March 19, 2024, Google revealed what its image research is focusing on in ScreenAI: A visual language model for UI and visually-situated language understanding [10].

“By combining the natural language capabilities of LLMs with a structured schema, we simulate a wide range of user interactions and scenarios to generate synthetic, realistic tasks. In particular, we generate three categories of tasks:

Question answering: The model is asked to answer questions regarding the content of the screenshots, e.g., “When does the restaurant open?”

Screen navigation: The model is asked to convert a natural language utterance into an executable action on a screen, e.g., “Click the search button.”

Screen summarization: The model is asked to summarize the screen content in one or two sentences.”

While the tech giant works on advancing its image technology, there are simple things SEOs can do.

More Ways to Improve Web Images

Google Search uses image alt text

This important image attribute lets you provide more metadata for an image. Text that describes an image also improves accessibility for people who cannot see the images on a web page. This includes people who use screen readers or have low-bandwidth connections.

Google leverages alt text in tandem with computer vision algorithms and the page’s contents to understand the image’s subject matter. Create useful, information-rich alt text that uses keywords appropriately and supports the page’s content. Avoid stuffing alt attributes with keywords, as it may cause a negative user experience or trigger a spam alert.
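A quick audit can flag images that have no alt text at all. The sketch below uses Python's standard library HTML parser; the class name and sample HTML are made up for the example. Note that purely decorative images may intentionally carry an empty alt attribute, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt_text = (attributes.get("alt") or "").strip()
        if not alt_text:
            self.missing_alt.append(attributes.get("src") or "")


# Hypothetical page fragment.
sample_html = (
    '<img src="scones.jpg" alt="Plate of blueberry scones">'
    '<img src="hero.jpg">'
    '<img src="logo.png" alt="">'
)

audit = AltTextAudit()
audit.feed(sample_html)
print(audit.missing_alt)
```

In this sample, hero.jpg and logo.png are flagged, while scones.jpg passes because it has descriptive alt text.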

WordPress 6.3

This WordPress update comes with new enhancements to image loading. These can improve loading time by up to 21% for any WordPress page using a hero image. Slow load times are often the cause of lost viewers. Fast-loading images show up more often in Google HowTo and FAQ search results.

Be aware that “Near Me” search queries can pull images from Google Business profiles. So, even with a slow site, images used in Google Posts, for example, may still win you a click through Google Image Search.

Google Workspace Labs

Business Workspace accounts let you create original images from text within Google Slides. [8] Iterative prompts yield better results. Provide sufficient information about your subject, setting, distance to subject, materials, or background. For example: “A close up of a pickleball paddle made out of polymer and carbon fiber held by a lady on an outdoor court near a lake at sunset.”

Microsoft Teams Chat

Chat’s code interpreter can resize images and provide related code. You can receive a different resolution file back than the one that was sent. This is one way to get the correct image size you want and with good resolution for web content.

Google Advanced Image Search Tool

I really like this tool. [9] Have some fun and try it. I like it when I find it challenging to describe my search in words. It is one aspect of “Multisearch.”

This means of searching can place the image pack at the top of SERPs. We find this occurs more often on mobile. If your image gains placement in the pack, your search impressions can spike.

Google’s Pixel 8 Audio Magic Eraser

Computer vision embeddings may signal important features such as the occurrence of certain objects, shapes, colors, or other visual patterns. Google’s Pixel 8 Audio Magic Eraser is an AI-powered editing feature that reduces distracting video sounds. This feature uses machine learning to identify and sort sounds into different layers that you can control.

Google Text Result Images

Google Text Result Images or SERP thumbnail images can be generated automatically from whatever its bot crawls first. You may gain more than one image displayed, especially for e-commerce sites. A savvy SEO can help businesses encourage specific images to appear for the given query.

A simple tap on a text result image takes you to the web page that is embedding the image. They appear more often for image-seeking queries. For more complex question-answer query types, you can look up both images and text at the same time with Google multi-search.

Google’s Ad Product Studio tool

Its new Ad Product Studio tool offers generative AI to help businesses of all sizes create a range of high-quality product imagery. It can generate custom product scenes that align with seasonal or trending opportunities. With a click, you can remove distracting backgrounds and improve the image resolution and sharpness without costly photo shoots.

How Images on Your Website Can Lead to CRO

Images nab searchers’ gaze on SERPs. They help you stand out from others. You can benefit from the multi-image thumbnail treatment for eCommerce category pages. Google Shopping Results are image-based.

Neural Object Decomposition is a process that separates objects in an image or video into individual, bite-size components. You can win your market share of SERPs that incorporate images and rich results and build your authority simultaneously. If you create visual assets that generate links, it’s a natural form of backlink profile building.

Image captioning helps CRO through:

- Better site accessibility.

- Better image search rankings.

- Improved site visibility.

- Easier content comprehension of your site by crawlers.

Google may provide a carousel of sites below its AI answers in search results. Sometimes, it has a “Read more” label or may appear in “Related Searches.” Google’s Related Search Carousel is a list of similar search terms that are displayed at the bottom of the SERP. It can include an image or icon for each query and can appear for brands, programs, cities, and places.

SUMMARY: Images in Google AI-powered Multisearch Experiences

Hill Web Marketing helps businesses include images in their SEO schema markup to increase the likelihood of gaining visual rich snippets. New ways keep emerging to win extra visibility through other search features.

Are you ready to improve your image search capabilities? Contact Hill Web Marketing

Resources:

[1] https://techcrunch.com/2023/10/12/googles-ai-powered-search-experience-can-now-generate-images-write-drafts/

[2] https://www.fastcompany.com/91120099/openai-new-tool-detect-images-created-by-dall-e-3

[3] https://truelist.co/blog/google-search-statistics/

[4] https://blog.google/products/search/multisearch/

[5] https://cloud.google.com/vertex-ai

[6] https://support.google.com/websearch/answer/14508957

[7] https://cloud.google.com/use-cases/multimodal-ai

[8] https://support.google.com/docs/answer/13635180

[9] https://www.google.com/advanced_image_search

[10] https://research.google/blog/screenai-a-visual-language-model-for-ui-and-visually-situated-language-understanding/